I’ve recently been using NodeJS to build website scrapers quickly, usually in fewer than 100 lines of code. This tutorial shows how to create your own scraper using two NodeJS modules: request and cheerio. The same code can be adapted to perform more complex tasks, such as completing and submitting a form.

Node Modules

Assuming you already have NodeJS installed, we require two additional modules to be installed:

- request – The simplest way possible to make http and https calls
- cheerio – A fast, flexible, and lean implementation of core jQuery designed specifically for the server

You can install them with the npm (Node Package Manager) command-line tool by running `npm install request cheerio`.

The Scraper

Once installed, we’re ready to create our scraper. In this tutorial, we use the request module to fetch a webpage, and cheerio to select the elements we need. Since cheerio implements core jQuery, we can use the same selectors to extract information from the page we just fetched.

On lines 6-10, we set the default settings we want to use. Since most pages set cookies, it’s a good idea to keep jar set to true. This means that any cookies the page sets are passed along with subsequent requests.
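As a minimal sketch of the defaults step (not the original listing): `request.defaults()` returns a wrapper that applies the given options to every call made through it. The helper name `createClient` is hypothetical, `followAllRedirects` is borrowed from the example later in this post, and the request module is passed in as an argument so the helper can also be tried with a stub.

```javascript
// Hypothetical helper: wrap the request module with shared default options.
// `request` is whatever require('request') returns, passed in by the caller.
function createClient(request) {
  return request.defaults({
    jar: true,                // remember cookies and send them on later requests
    followAllRedirects: true  // assumption: also follow redirects on non-GET requests
  });
}
```

With the module installed, `const req = createClient(require('request'));` gives a `req` whose `get` and `post` calls all use these defaults.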

On line 14, we set the URL we want to scrape. Lines 15-17 show an example of custom headers we can pass. Here, you can set anything from authentication headers to content-type, cookies and user-agent.
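The URL and custom headers can be sketched as an options object handed to `get`. The helper name `fetchPage` and the user-agent string are placeholders, not values from the original listing, and `req` is assumed to be the request module or a wrapper created with `request.defaults()`.

```javascript
// Hypothetical helper: GET a page with custom headers.
// `req` is the request module (or a defaults() wrapper) supplied by the caller.
function fetchPage(req, url, headers, done) {
  const options = { url: url, headers: headers };
  req.get(options, function (err, resp, body) {
    if (err) return done(err);   // network or protocol error
    done(null, body, resp);      // hand back the HTML and the raw response
  });
}

// Example header set (placeholder value, adjust to your needs).
const exampleHeaders = { 'User-Agent': 'my-scraper/1.0' };
```

Any other header, such as an authentication or content-type header, would go into the same `headers` object.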

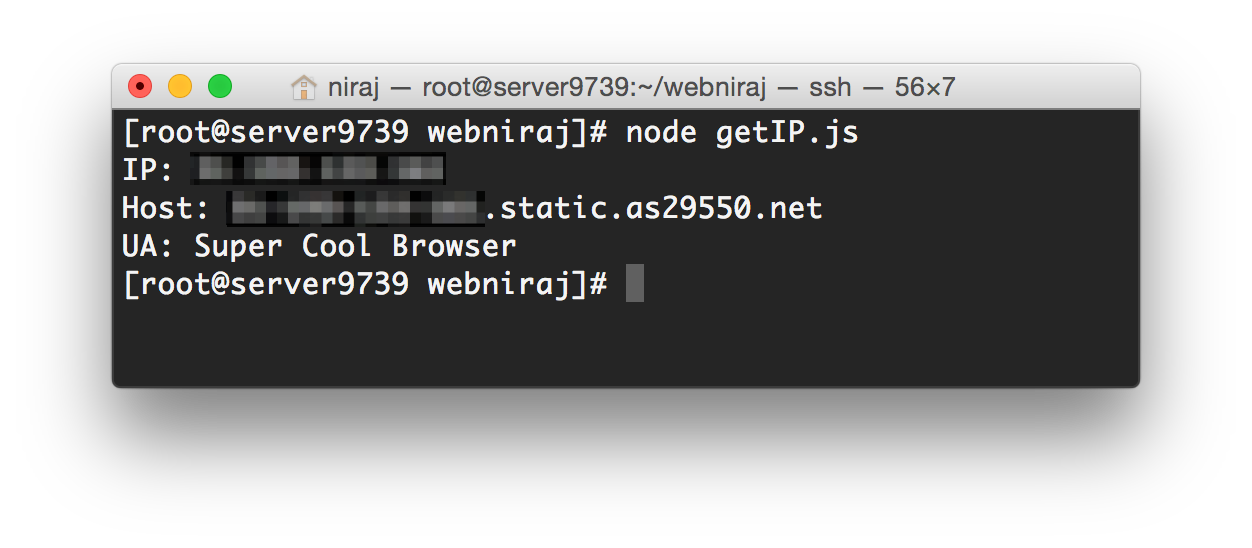

On lines 19-27, we load the content of the page into cheerio and then select the elements we’re interested in. If it works correctly, the script will return your IP address, hostname and user-agent.
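The selection step might look like the sketch below. The selectors `#ip`, `#host` and `#ua` are hypothetical, since they depend entirely on the markup of the page being scraped, and `extractInfo` is an illustrative name. The cheerio module is passed in as an argument.

```javascript
// Hypothetical helper: pull three values out of a fetched page.
// `cheerio` is whatever require('cheerio') returns; selectors are placeholders.
function extractInfo(cheerio, body) {
  const $ = cheerio.load(body);          // parse the HTML into a jQuery-like object
  return {
    ip: $('#ip').text().trim(),          // text content of the matched element
    host: $('#host').text().trim(),
    userAgent: $('#ua').text().trim()
  };
}
```

Inside a request callback this would be called as `extractInfo(require('cheerio'), body)`, and the returned object can then be logged or stored.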

POST Requests

The above is an example of a GET request, but POST requests are possible too. You can make a POST request by calling req.post and passing the form data in the form option.
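A minimal sketch of such a POST, assuming `req` is the request module or a `request.defaults()` wrapper. The helper name `submitForm` and any URL or field names you pass in are illustrative. The `form` option sends the fields as URL-encoded form data.

```javascript
// Hypothetical helper: submit form fields with a POST request.
// `fields` is a plain object, e.g. { username: 'me', comment: 'hello' }.
function submitForm(req, url, fields, done) {
  req.post({ url: url, form: fields }, function (err, resp, body) {
    if (err) return done(err);            // network or protocol error
    done(null, resp.statusCode, body);    // status code and response body
  });
}
```

If `req` was created with jar set to true, cookies received from earlier GET requests are sent along with this POST.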

When I do POST requests, how do I deal with the security tokens and sessions and stuff? I can’t really just do a regular POST request; it seems I need to pass in extra security-related random values. Thanks in advance! 👍

If you need to rely on a page-generated CSRF token or similar, you can use a GET request to fetch the initial page, use cheerio to read the form contents, token etc., and then pass those values on to a subsequent POST request.

Here is a very rough example:

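    var request = require('request');
    var cheerio = require('cheerio');

    // Fetch the page that contains the form and its token
    request.get({
      url: "https://domain.com/page",
      jar: true,
      followAllRedirects: true
    }, function(err, resp, body){
      if (err) throw err;
      var $ = cheerio.load(body);
      var token = $('[name="_token"]').val();

      // Send the token back; jar: true reuses the cookies from the GET
      request.post({
        url: "https://domain.com/post",
        form: { '_token': token },
        jar: true,
        followAllRedirects: true
      }, function(error, response, body){
        // do something with the result
      }); // end of post
    }); // end of get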